SPRING 2021, THE EVIDENCE FORUM, WHITE PAPER

Jennifer N. Hill, MA, Implementation Science Research Scientist, Patient-Centered Research, Evidera, a PPD business | Larissa Stassek, MPH, Senior Research Associate, Patient-Centered Research, Evidera, a PPD business | Emma Low, PhD, Senior Research Associate, Patient-Centered Research, Evidera, a PPD business | Sonal Mansukhani, PhD, MBA, BPharm, Former Senior Research Associate, Patient-Centered Research, Evidera, a PPD business | Elizabeth Bacci, PhD, Senior Research Scientist, Patient-Centered Research, Evidera, a PPD business

What is Implementation Science?

The hook that implementation scientists often use to drive home the importance of their work is that it takes an average of 17 years for evidence to be implemented into practice, and only 14% of original research ever reaches patients.1,2 But what is implementation science?

While there are several different definitions of implementation science, it is broadly defined as the scientific study of methods to promote systematic uptake of research findings and other evidence-based practices into routine practice, and hence, to improve the quality and effectiveness of health services and care.3 It is also referred to as dissemination and implementation research or knowledge translation.4

Who are the stakeholders and what is the value proposition?

Everyone benefits from implementation science, including hospital administrators, providers and other healthcare professionals, pharmacists, health insurers, policymakers, regulators, pharmaceutical companies, caregivers, and, most importantly, patients.

The value of implementation science is becoming clearer as health systems face resource constraints. Using and evaluating evidence-based strategies is essential to ensuring that investments in research lead to greater use of evidence while maximizing healthcare value and improving public health.5,6,7

Implementation Science Study Designs

What does an implementation science study look like?

Clinical research and implementation science share similarly rigorous approaches to scientific study. While clinical trials are largely focused on establishing effectiveness and tolerability, implementation science is focused on understanding and addressing the barriers to, and facilitators of, the uptake of evidence-based practices and interventions in the context in which they are being introduced.8

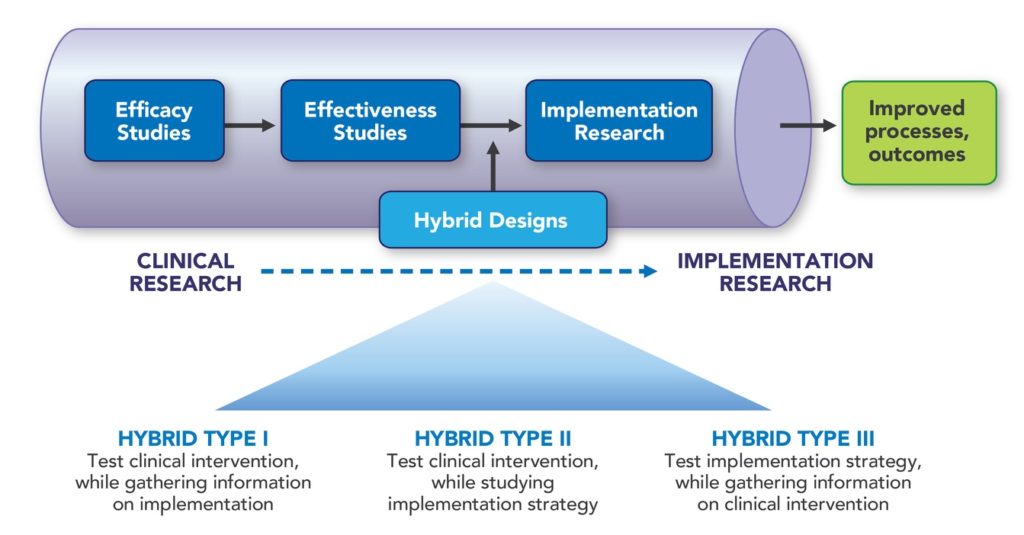

Implementation can be considered throughout the research pipeline, but implementation science studies may come after, or be combined with, effectiveness studies (see Figure 1). These combination studies are known as hybrid designs, of which there are three types.9 Hybrid designs are usually most appropriate for minimal-risk interventions, i.e., those with at least some evidence of effectiveness and strong face validity to support use of the intervention in a new way, such as in a new setting or population or with a new method of delivery.10

- Hybrid Type I designs are primarily focused on testing the clinical intervention and collecting evidence of its effectiveness, while gathering some data on implementation, such as acceptability or feasibility.

- Hybrid Type II designs typically place an equal emphasis on testing the clinical intervention and the implementation strategy.

- Hybrid Type III designs are typically focused on testing the implementation strategy, assessing outcomes such as fidelity and adoption, while collecting some data on clinical effectiveness.

Selecting a hybrid design depends on the level of evidence available on the intervention, the trial population, and the information available to support the implementation strategy. In an implementation trial, the research team has an evidence-based intervention or practice that needs to be taken up, and the implementation expert tests hypotheses about modified strategies for uptake in a new setting, as well as fidelity to those strategies.11

The Role of Continuous Quality Improvement

Continuous Quality Improvement (CQI) involves incremental and iterative assessments of improvement based on small and/or large changes to processes or delivery of evidence-based practices or interventions. Goals may include, but are not limited to:

- Improvement of processes (e.g., system or clinic levels)

- Individual-level outcomes (e.g., patient, clinician)

- Regulatory outcomes (e.g., improved safety)13

Designs or methodologies for these types of improvement studies may include Plan-Do-Study-Act (PDSA) cycles14 or Six Sigma, which follows the Define, Measure, Analyze, Improve, and Control (DMAIC) problem-solving process.15 In CQI studies, improvements are made, their effect is assessed, and the cycle is repeated until the desired outcome is achieved.13 Data collection strategies used in CQI studies are similar to those used in program evaluation or implementation studies, but the analysis cycle is typically much quicker because findings are fed directly back into the study and acted upon immediately.16,17
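The iterative logic of a PDSA cycle can be pictured as a simple loop: plan a change, try it, measure the result, then keep or drop the change and repeat until the target is met. The Python sketch below illustrates only that control flow. The function and field names, the single numeric "uptake" metric, and the rule of adopting a change only if the measured outcome improves are hypothetical simplifications for illustration, not a prescribed CQI method; in real studies the Study and Act steps involve the team reviewing quantitative and qualitative data rather than an automatic comparison.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CycleResult:
    cycle: int      # cycle number (1-based)
    change: str     # description of the change tested in this cycle
    observed: int   # outcome measured after the change (hypothetical metric)
    adopted: bool   # Act step: was the change kept?


def run_pdsa(
    baseline: int,
    target: int,
    plan: Callable[[int, int], str],
    do_and_study: Callable[[str, int], int],
    max_cycles: int = 10,
) -> List[CycleResult]:
    """Repeat Plan-Do-Study-Act cycles until the target is reached or cycles run out."""
    history: List[CycleResult] = []
    current = baseline
    for cycle in range(1, max_cycles + 1):
        if current >= target:  # desired outcome achieved: stop cycling
            break
        change = plan(cycle, current)             # Plan: choose a small change to test
        observed = do_and_study(change, current)  # Do + Study: try it and measure the outcome
        adopted = observed > current              # Act: keep the change only if it helped
        if adopted:
            current = observed
        history.append(CycleResult(cycle, change, observed, adopted))
    return history


# Hypothetical usage: percentage of eligible patients receiving an evidence-based
# practice, starting at 50% with a target of 80%; each simulated change adds 10 points.
log = run_pdsa(
    baseline=50,
    target=80,
    plan=lambda cycle, current: f"hypothetical change #{cycle}",
    do_and_study=lambda change, current: current + 10,
)
for result in log:
    print(result)
```

In this simulated run, three cycles are needed to move from 50% to 80%. Real PDSA cycles are richer than a keep-or-drop rule: the Act step may also adapt a change before testing it again.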

Methods and Data Collection

Implementation science studies can be retrospective (e.g., large-scale comparative case studies or retrospective assessment of factors affecting implementation), prospective (e.g., collecting data during an implementation trial to test specific hypotheses), or a combination of both. These studies may also be guided by a framework or theory that informs the design and conduct of the study, the design of data collection instruments, and the reporting of study findings.

Implementation science studies typically employ mixed methods, combining quantitative data (e.g., administrative data or data produced from databases or systems, closed-ended survey questions or measures, source documents) and qualitative data (e.g., interviews or focus groups, open-ended survey questions, meeting notes or minutes). Because implementation study designs and objectives are diverse, a variety of analytic approaches may be used to assess data from these sources, including traditional statistical and/or qualitative approaches, rapid analysis techniques,16,17 or triangulation of data across sources.18,19

Data collected within an implementation study are often complex and may be collected at several levels, such as the system level (governmental or policy), the organizational level, the site level (provider team or group), and the patient level.20 Outcomes may include, but are not limited to, changes in knowledge or attitudes, behavior change, health-related outcomes, process-related changes, and policy- or system-related changes.

How does program evaluation fit in?

Program evaluation can be, and often is, considered under the umbrella of implementation science. Program evaluations may be designed retrospectively, prospectively, or both, and are usually guided by an evaluation framework. They typically engage stakeholders, sometimes from multiple groups, in describing and establishing the design of the evaluation. This includes identifying key questions and indicators to measure key outcomes, and collecting data from many different sources, such as existing data and documents or newly collected data from surveys, focus groups, or interviews. Program evaluation requires synthesizing the findings while considering the needs of stakeholders, followed by review of, and agreement on, the conclusions of the evaluation among the stakeholder groups. This review of conclusions is a critical step to ensure that the results of the evaluation will be used for program improvement. The results of a program evaluation should tie back to the purposes identified early in the evaluation and be presented in a way that can be used and shared broadly with other stakeholder groups.

Example Implementation Science Studies

The following sections provide examples of how implementation science can be used to address different research needs.

DAILY ORAL (AT-HOME) TREATMENT VS. INJECTABLE (IN-CLINIC) TREATMENT

Population: Patients and providers in two studies, one conducted in the United States and one in Europe

Challenge: How to most effectively implement a new, long-acting injectable treatment that requires regular clinic visits, as opposed to the current standard of care, a daily oral medication self-administered at home. Because of the different route of administration and the need for more frequent clinic visits, the sponsor was interested in identifying the barriers and facilitators involved in making this treatment shift.

Approach: Both studies used implementation science frameworks in their design. The US-based study used the Consolidated Framework for Implementation Research (CFIR),21 whereas the European-based study used the Exploration, Preparation, Implementation and Sustainment (EPIS) framework alongside the implementation outcomes described by Proctor et al.22 Both studies also used a mixed methods approach involving individual surveys and one-on-one interviews. The US-based study adopted a single-arm design in which all sites received the same implementation support, including eight monthly facilitation calls with clinic staff. The European-based study used a two-arm design in which standard-arm sites received traditional implementation support and enhanced-arm sites received additional meetings and training. The enhanced arm also participated in CQI calls in which plans to address challenges were developed.

Stakeholders: Patients, doctors, nurses, and administrative clinic staff responsible for implementing the treatment, and the sponsor.

Key Findings: Through the surveys, interviews, and facilitation or CQI calls, stakeholders offered feedback on facilitators and barriers to successful implementation. This has allowed the research team and sponsor to better understand who is best suited for the new treatment, what types of clinics and settings may need additional support in implementation, and strategies for patients and clinics to be more successful in the transition to this new treatment. The study findings will be used to help advise and support clinical sites in the effective implementation of this new treatment in a real-world setting.

CAN A HEALTHCARE APP IMPACT CLINICAL OUTCOMES?

Population: Patients attending a specialty care clinic and providers at the specialty care clinic

Challenge: Evaluate a new app designed to track patient symptoms over time, including new or worsening symptoms; provide resources to patients; and improve patients' ability to communicate with their care team.

Approach: The patient interface is linked to a clinician dashboard, where the patient's clinical team tracks and responds to patient entries in real time. The study uses a mixed methods design, including one-on-one qualitative interviews with patient and clinical site users, patient surveys, and quantitative usage metrics, with the aims of improving the quality of the electronic system in clinical practice and determining whether the app affects clinical outcomes.

Key Findings: The results of this study will be disseminated in early 2022.

STUDYING PROGRAM IMPACT THROUGH RETROSPECTIVE AND PROSPECTIVE DATA

Population: Individuals from funding partner’s organization, individuals from leadership at program partner, and individuals from the field involved in the program

Challenge: Evaluate a program to understand its impact since inception (retrospective data) as well as at its current stage (prospective data). Although the program has been funded for nearly five years, efforts to study its impact have been largely informal. A dedicated evaluation was requested to support future funding decisions.

Approach: The evaluation followed the Centers for Disease Control and Prevention (CDC) Framework for Program Evaluation,23 which focuses on producing the most salient results while reinforcing the integrity and quality of the evaluation. The framework involves engaging stakeholders, describing the program, focusing the evaluation design, gathering credible evidence, justifying conclusions, and ensuring use and sharing lessons learned.

Stakeholders: The funding partner that provided guidance on aspects of program development and the partner responsible for the conduct of the program.

Key Findings: The evaluation provided key information on areas of strength and challenge within the program and areas of greatest impact. The findings and recommendations produced from the program evaluation were immediately used in presentations to high-level decision makers for the purpose of informing conversations about priorities for future focus.

Conclusion

Implementation science studies often consider multiple factors that may serve as barriers and/or facilitators at the system, site, or individual level. Analyses may draw on existing data, data collected specifically for the assessment, or both. Data may also be collected from a variety of sources at multiple timepoints throughout an assessment, and collection may carry over into a long-term assessment of sustainability. Implementation science plays a critical role in producing evidence-based strategies and supporting the uptake of evidence-based practices and interventions, with the goal of improving healthcare and patient outcomes.

References

1. Balas EA, Weingarten S, Garb CT, Blumenthal D, Boren SA, Brown GD. Improving Preventive Care by Prompting Physicians. Arch Intern Med. 2000 Feb 14;160(3):301-8. doi: 10.1001/archinte.160.3.301.

2. Morris ZS, Wooding S, Grant J. The Answer is 17 Years, What is the Question: Understanding Time Lags in Translational Research. J R Soc Med. 2011 Dec;104(12):510-20. doi: 10.1258/jrsm.2011.110180.

3. Eccles MP, Mittman BS. Welcome to Implementation Science. Implement Sci. 2006 Feb 22;1:1. doi: 10.1186/1748-5908-1-1.

4. Glasgow RE, Vinson C, Chambers D, Khoury MJ, Kaplan RM, Hunter C. National Institutes of Health Approaches to Dissemination and Implementation Science: Current and Future Directions. Am J Public Health. 2012 Jul;102(7):1274-81. doi: 10.2105/AJPH.2012.300755. Epub 2012 May 17.

5. Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An Introduction to Implementation Science for the Non-Specialist. BMC Psychol. 2015 Sep 16;3(1):32. doi: 10.1186/s40359-015-0089-9.

6. Eisman AB, Kilbourne AM, Dopp AR, Saldana L, Eisenberg D. Economic Evaluation in Implementation Science: Making the Business Case for Implementation Strategies. Psychiatry Res. 2020 Jan;283:112433. doi: 10.1016/j.psychres.2019.06.008. Epub 2019 Jun 7.

7. Kilbourne AM, Glasgow RE, Chambers DA. What Can Implementation Science Do for You? Key Success Stories from the Field. J Gen Intern Med. 2020 Nov;35(Suppl 2):783-787. doi: 10.1007/s11606-020-06174-6.

8. Bauer MS, Kirchner J. Implementation Science: What Is It and Why Should I Care? Psychiatry Res. 2020 Jan;283:112376. doi: 10.1016/j.psychres.2019.04.025. Epub 2019 Apr 23.

9. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-Implementation Hybrid Designs: Combining Elements of Clinical Effectiveness and Implementation Research to Enhance Public Health Impact. Med Care. 2012 Mar;50(3):217-26. doi: 10.1097/MLR.0b013e3182408812.

10. Bernet AC, Willens DE, Bauer MS. Effectiveness-Implementation Hybrid Designs: Implications for Quality Improvement Science. Implement Sci. 2013 Apr;8(Suppl 1):S2. doi: 10.1186/1748-5908-8-S1-S2.

11. Stetler CB, Legro MW, Wallace CM, Bowman C, et al. The Role of Formative Evaluation in Implementation Research and the QUERI Experience. J Gen Intern Med. 2006 Feb;21 Suppl 2(Suppl 2):S1-8. doi: 10.1111/j.1525-1497.2006.00355.x.

12. Douglas NF, Campbell WN, Hinckley JJ. Implementation Science: Buzzword or Game Changer? J Speech Lang Hear Res. 2015 Dec;58(6):S1827-36. doi: 10.1044/2015_JSLHR-L-15-0302.

13. O’Donnell B, Gupta V. Continuous Quality Improvement. National Center for Biotechnology Information. Available at: https://www.ncbi.nlm.nih.gov/books/NBK559239/. Accessed March 17, 2021.

14. Donnelly P, Kirk P. Use the PDSA Model for Effective Change Management. Educ Prim Care. 2015 Jul;26(4):279-81. doi: 10.1080/14739879.2015.11494356.

15. Bandyopadhyay JK, Coppens K. Six Sigma Approach to Healthcare Quality and Productivity Management. Intl J Prod Qual Manag. 2005 Dec;5(1):v1-2.

16. Hamilton AB, Finley EP. Qualitative Methods in Implementation Research: An Introduction. Psychiatry Res. 2019 Oct;280:112516. doi: 10.1016/j.psychres.2019.112516. Epub 2019 Aug 10.

17. Taylor B, Henshall C, Kenyon S, Litchfield I, Greenfield S. Can Rapid Approaches to Qualitative Analysis Deliver Timely, Valid Findings to Clinical Leaders? A Mixed Methods Study Comparing Rapid and Thematic Analysis. BMJ Open. 2018 Oct 8;8(10):e019993. doi: 10.1136/bmjopen-2017-019993.

18. Tonkin-Crine S, Anthierens S, Hood K, Yardley L, et al. Discrepancies Between Qualitative and Quantitative Evaluation of Randomised Controlled Trial Results: Achieving Clarity Through Mixed Methods Triangulation. Implement Sci. 2016 May 12;11:66. doi: 10.1186/s13012-016-0436-0.

19. Palinkas LA, Cooper BR. Mixed Methods Evaluation in Dissemination and Implementation Science. In: Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd ed. Oxford University Press; 2017 Nov 10:225-249. doi: 10.1093/oso/9780190683214.003.0020.

20. Ferlie EB, Shortell SM. Improving the Quality of Health Care in the United Kingdom and the United States: A Framework for Change. Milbank Q. 2001;79(2):281-315. doi: 10.1111/1468-0009.00206.

21. Damschroder LJ, Aron DC, Keith RE, et al. Fostering Implementation of Health Services Research Findings into Practice: A Consolidated Framework for Advancing Implementation Science. Implement Sci. 2009 Aug 7;4:50. doi: 10.1186/1748-5908-4-50.

22. Proctor E, Silmere H, Raghavan R, et al. Outcomes for Implementation Research: Conceptual Distinctions, Measurement Challenges, and Research Agenda. Adm Policy Ment Health. 2011 Mar;38(2):65-76. doi: 10.1007/s10488-010-0319-7.

23. Centers for Disease Control and Prevention. Framework for Program Evaluation in Public Health. MMWR Recomm Rep. 1999 Sep 17;48(RR-11):1-40.